The viewer can also highlight URLs using EasyLists, fetch HAR files automatically using one of several engines, and supports user highlighting of records, which are stored separately.

# HAR file viewer code

This repo contains a little piece of code that I use for analysing HAR files (network traffic record files). Usually, these files are used for performance analysis of web pages, so none of the other viewers suited my need to display the records in a tree-like fashion and see the relations between the elements of a web page. And since I needed other features as well, it didn't make sense to adapt any of the available tools. This code visualizes the records by connecting the Referer and Location HTTP headers to show those relations.

Objectron makes the task of validation, extraction, and flattening super simple. With a declarative approach, it facilitates comparing a JS object to a generic model/pattern to validate and extract matches based on regex patterns. I won't be getting into all the basics, but you can learn more about the project, including an introduction and other use cases, here.
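To make the Referer/Location idea behind the viewer concrete, here's a minimal sketch in plain Python. It is not the viewer's actual code: the function names (`header_value`, `build_relations`, `render_tree`) and the sample entries are made up for illustration, and it assumes each entry carries the standard HAR `request.url`, `request.headers` and `response.headers` fields.

```python
from collections import defaultdict
from urllib.parse import urljoin


def header_value(headers, name):
    """Return the first header value matching `name` (case-insensitive), or None."""
    for header in headers:
        if header.get("name", "").lower() == name.lower():
            return header.get("value")
    return None


def build_relations(entries):
    """Map each parent URL to the list of URLs it led to (hypothetical helper)."""
    children = defaultdict(list)

    def link(parent, child):
        if child not in children[parent]:
            children[parent].append(child)

    for entry in entries:
        url = entry["request"]["url"]
        # A request hangs under the page or resource named in its Referer header.
        referer = header_value(entry["request"].get("headers", []), "Referer")
        if referer and referer != url:
            link(referer, url)
        # A redirect target hangs under the URL that answered with Location.
        location = header_value(entry["response"].get("headers", []), "Location")
        if location:
            link(url, urljoin(url, location))  # Location may be relative
    return children


def render_tree(children, root, depth=0, seen=None):
    """Return indented lines for the tree below `root`, expanding each URL once."""
    seen = set() if seen is None else seen
    lines = ["  " * depth + root]
    if root in seen:
        return lines
    seen.add(root)
    for child in children.get(root, []):
        lines.extend(render_tree(children, child, depth + 1, seen))
    return lines


# Made-up entries, trimmed to the fields this sketch reads.
SAMPLE_ENTRIES = [
    {"request": {"url": "https://example.com/", "headers": []},
     "response": {"headers": []}},
    {"request": {"url": "https://example.com/app.js",
                 "headers": [{"name": "Referer", "value": "https://example.com/"}]},
     "response": {"headers": []}},
    {"request": {"url": "https://example.com/old",
                 "headers": [{"name": "referer", "value": "https://example.com/"}]},
     "response": {"headers": [{"name": "Location", "value": "/new"}]}},
]

print("\n".join(render_tree(build_relations(SAMPLE_ENTRIES), "https://example.com/")))
```

The `seen` set keeps the rendering from looping when two URLs refer to each other, at the cost of expanding a shared resource only the first time it appears.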

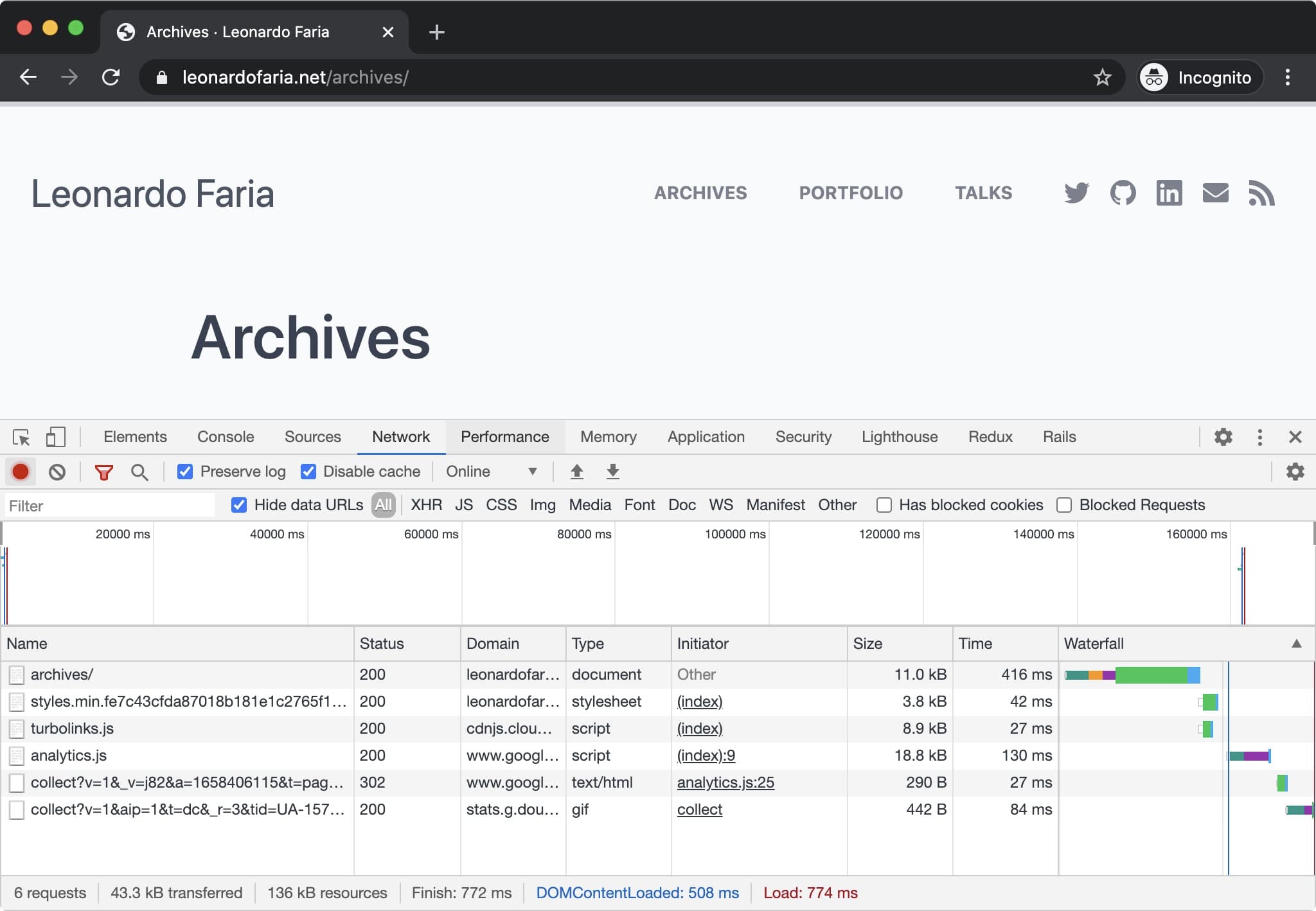

Check if the object key conforms to a specific pattern or value.Write multiple if statements to check if the entry object key has a value to begin with.Writing a custom script to do all that actually is simple but prone to a lot of repetition and writing several conditions. We might only need logs with GET requests, so that check will need to happen A log entry will need to be properly validated for having the required data, otherwise to be discarded.For each entry, validate that the needed data exists and push it into a flat object.To extract and flatten the JSON file into a CSV we'd need to: Summary of page request and response timings.Header values around cache-control, gzip and content-length.Response: status, content size, content type, cache control.Request: method, url, httpVersion, headerSize and bodySize.Most header values, with a couple of exceptionsįields that can be interesting for a given log entry:.

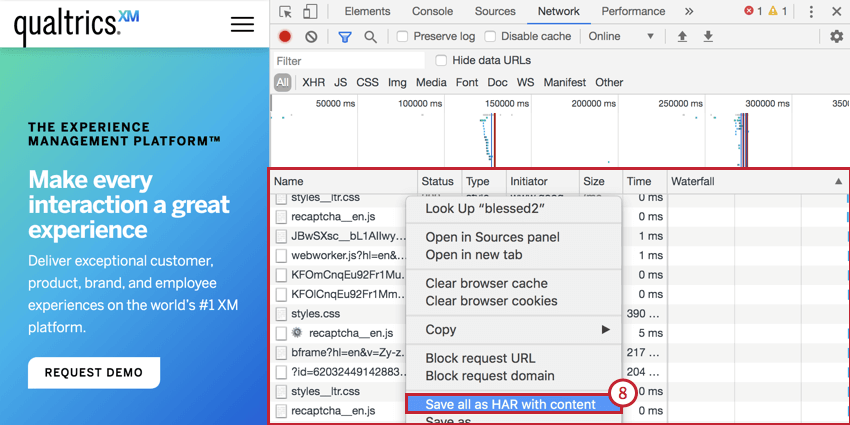

HAR fields that wouldn't be needed for analysis: This, along with flattening the structure into a table will make the contents of the file usable for plugging into analysis libraries such as pandas. The request/response logs we're looking into extracting is in log.entries Extracting and transforming HAR data to CSVįirst step, is to decide which fields, per log item, make sense to keep that will be useful in the analysis and which drop. I've created a sample HAR file from a session covering multiple pages, you can find it here.
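For comparison, here's roughly what the hand-written version of the extraction steps in this section (validate each entry, keep only GET requests, flatten the chosen fields) could look like. This is a sketch in plain Python using only the standard library, not Objectron; the function names, the flat column names and the sample data are my own assumptions, while the nested keys it reads (`headersSize`, `bodySize`, `content.size`, `content.mimeType`, `time`) follow the HAR format.

```python
import csv
import io


def header_value(headers, name):
    """Return the first header value matching `name` (case-insensitive), or None."""
    for header in headers:
        if header.get("name", "").lower() == name.lower():
            return header.get("value")
    return None


def flatten_entry(entry):
    """Return a flat dict for one log entry, or None if it should be discarded."""
    request = entry.get("request") or {}
    response = entry.get("response") or {}
    # Discard entries that lack the data we require.
    if not request.get("url") or "status" not in response:
        return None
    # Keep GET requests only.
    if request.get("method") != "GET":
        return None
    content = response.get("content") or {}
    headers = response.get("headers") or []
    return {
        "method": request["method"],
        "url": request["url"],
        "httpVersion": request.get("httpVersion"),
        "requestHeadersSize": request.get("headersSize"),
        "requestBodySize": request.get("bodySize"),
        "status": response["status"],
        "contentSize": content.get("size"),
        "contentType": content.get("mimeType"),
        "cacheControl": header_value(headers, "cache-control"),
        "contentEncoding": header_value(headers, "content-encoding"),
        "contentLength": header_value(headers, "content-length"),
        "time": entry.get("time"),
    }


def har_to_csv(har):
    """Flatten har["log"]["entries"] into CSV text (empty string if no rows)."""
    rows = [row for row in map(flatten_entry, har["log"]["entries"]) if row is not None]
    buffer = io.StringIO()
    if rows:
        writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
        writer.writeheader()
        writer.writerows(rows)
    return buffer.getvalue()


# Made-up data: one usable GET, one POST, one entry with no response status.
SAMPLE_HAR = {"log": {"entries": [
    {"time": 12.5,
     "request": {"method": "GET", "url": "https://example.com/",
                 "httpVersion": "HTTP/1.1", "headersSize": 120, "bodySize": 0},
     "response": {"status": 200,
                  "content": {"size": 512, "mimeType": "text/html"},
                  "headers": [{"name": "Cache-Control", "value": "max-age=600"}]}},
    {"request": {"method": "POST", "url": "https://example.com/api"},
     "response": {"status": 201}},
    {"request": {"method": "GET", "url": "https://example.com/broken"},
     "response": {}},
]}}

print(har_to_csv(SAMPLE_HAR))
```

Even in this small form, every field needs its own existence check or fallback, which is the repetition a declarative pattern is meant to remove. The resulting text can be written to a file and loaded with `pandas.read_csv`.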

When working on front-end web performance optimizations, there are several tools out there to help in creating performance audits, identifying opportunities and estimating potential gains.

Often, though, I find myself needing a bit more flexibility in building custom analysis reports from raw data, especially if the analysis involves looking at resources across multiple pages of a user journey and understanding the requests made.

While I won't be delving much into the analysis process in this article, I'll be covering the first step towards enabling it: extracting and transforming this data from a browser output to make it consumable by data analysis libraries such as pandas. The Objectron JS module makes such a task very simple to perform.

Several modern browsers allow recording and exporting HTTP sessions via HAR files. HAR is a standard file format used by several HTTP session tools to export captured data. The format is basically a JSON object with a particular field distribution.
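To give a feel for that field distribution, here's a heavily trimmed HAR skeleton and a small Python sketch that counts requests per page of a session. The sample is made up and leaves out fields a real export would include (timestamps, timings, headers and so on), and `requests_per_page` is just an illustrative helper name; the `log.pages`, `log.entries` and `pageref` keys come from the HAR format.

```python
import json
from collections import Counter

# Trimmed, made-up HAR: real exports carry many more fields per page and entry.
SAMPLE_HAR = json.loads("""
{
  "log": {
    "version": "1.2",
    "creator": {"name": "example", "version": "0"},
    "pages": [
      {"id": "page_1", "title": "Home"},
      {"id": "page_2", "title": "Checkout"}
    ],
    "entries": [
      {"pageref": "page_1", "request": {"method": "GET", "url": "https://example.com/"}},
      {"pageref": "page_1", "request": {"method": "GET", "url": "https://example.com/app.js"}},
      {"pageref": "page_2", "request": {"method": "GET", "url": "https://example.com/checkout"}}
    ]
  }
}
""")


def requests_per_page(har):
    """Count log entries per page, keyed by page title (falling back to the id)."""
    log = har["log"]
    titles = {page["id"]: page.get("title", page["id"]) for page in log.get("pages", [])}
    counts = Counter(entry.get("pageref") for entry in log["entries"])
    return {titles.get(ref, ref): count for ref, count in counts.items()}


print(requests_per_page(SAMPLE_HAR))
```

Each entry points back to its page through `pageref`, which is what makes it possible to look at resources across the multiple pages of a user journey.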
